For AI-powered products, the difference between a good response and a great one often comes down to the prompt you give the LLM.

A well-crafted prompt can dramatically improve user experience, boost engagement, and unlock new possibilities for your feature. But how do you know which prompt works best?

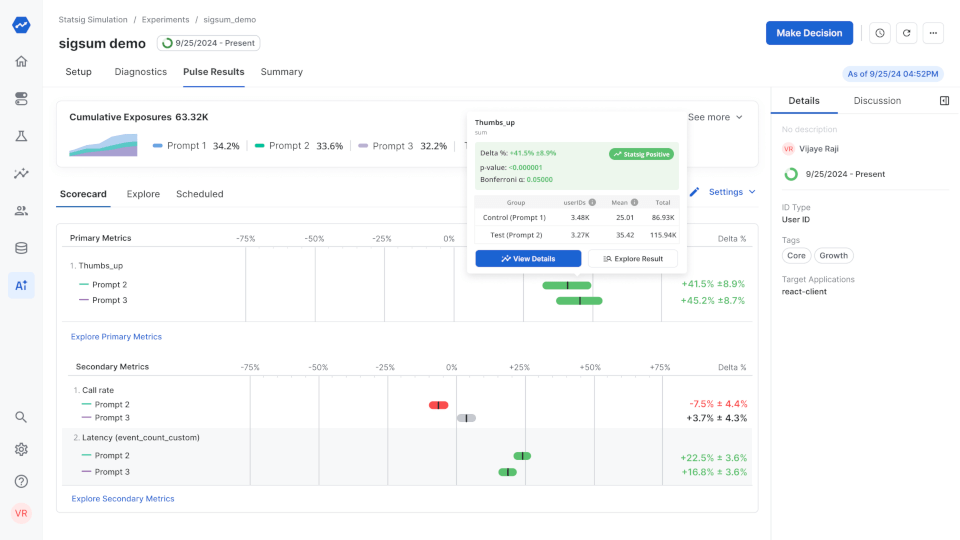

That's where our newest feature comes in. Today, we're thrilled to announce Statsig AI Prompt Experimentation.

AI Prompt Experimentation will make it much easier for product teams to optimize their AI features quickly, bringing the power of sophisticated A/B testing to the realm of prompt engineering.

Why prompt experimentation matters

Large language models are increasingly commoditized: virtually every developer today has access to the full array of best-in-class LLMs.

Given how widely available cutting-edge models are, the model alone no longer sets a product apart. What matters is building a complete AI application that fits your business context and customer needs. Once you've identified a use case, you still need to choose the right model, prepare a clean dataset for retrieval-augmented generation (RAG), and tune other inputs such as temperature and max tokens.

But then comes the trickiest part: crafting the perfect prompt.

It's part art, part science, and—until now—a whole lot of guesswork. Creating the perfect prompt can make an enormous difference in the performance of your application, but it’s very hard to know if you’re getting it right.

We keep hearing the same challenges from teams trying to choose the right prompt:

"How do I know if my prompts are actually improving user experience?"

"I'm spending hours tweaking prompts, but I can't tell if it's making a difference."

"Our team is debating prompt variations, but we don't have data to back up our decisions."

These are the problems we set out to solve. We wanted to bring the clarity and confidence of A/B testing to the world of prompt engineering.

Bridging the gap: From data scientists to prompt engineers

For years, top tech companies have relied on sophisticated experimentation tools to optimize their products. At Statsig, we've made this capability available to thousands of companies around the world.

Now we're bringing this tooling, once the domain of elite data scientists, directly to prompt engineers and product teams of all sizes.

Here's how it works:

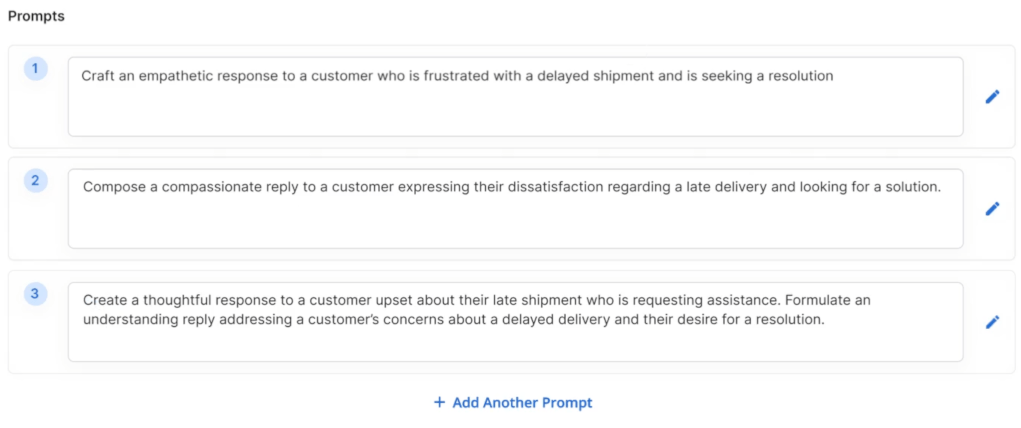

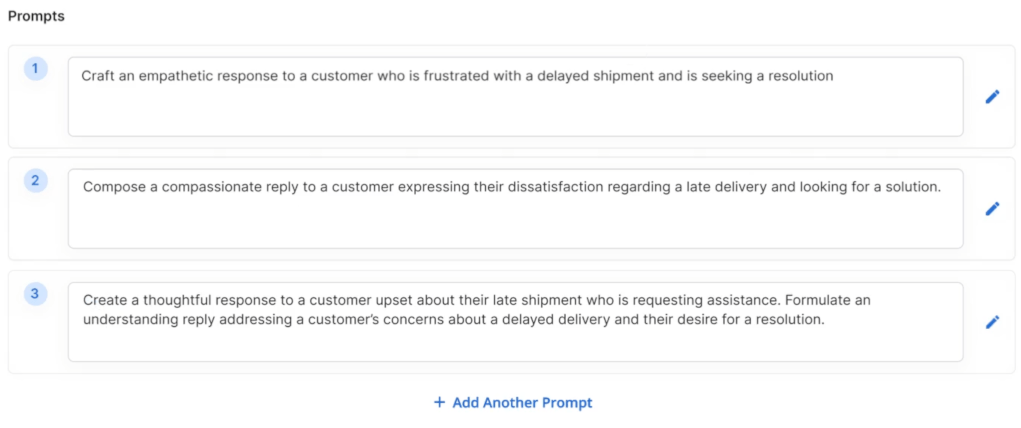

Test multiple prompts at once: Say goodbye to changing one prompt and hoping for the best. Enter the prompt variants you want to test in Statsig and run them simultaneously (we can also help you generate prompt variations using AI!).

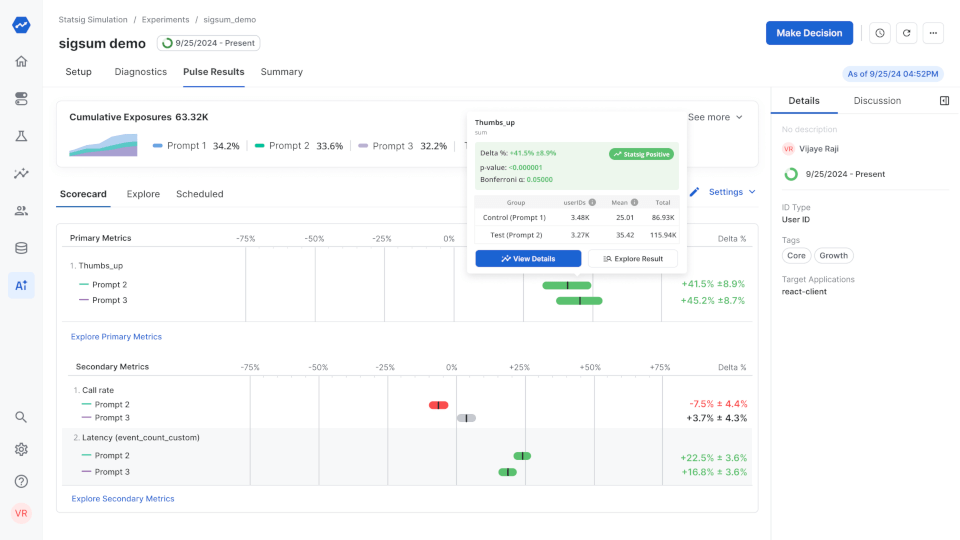

Measure what matters: Track the metrics that are important to your specific use case. Whether it's user engagement, task completion rate, or custom KPIs, we've got you covered.

Get real-time insights: Watch as the data rolls in, with easy-to-understand visualizations that help you spot winners quickly.

Collaborate seamlessly: Your whole team can access the results (we don't charge per seat!), making it easier to align on decisions and move forward together.

Iterate with confidence: Use the insights you gain to refine your prompts further, creating a cycle of continuous improvement.
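To make the workflow above more concrete, here is a minimal sketch of the two mechanics behind any prompt A/B test: deterministically assigning each user to a prompt variant, and comparing a success metric (here, task completion rate) between variants. This is not Statsig's SDK or implementation. The variant texts, the experiment name, and the helpers `assign_variant` and `two_proportion_z` are hypothetical, and the completion counts in the usage are made-up illustration values, not results.

```python
import hashlib
import math

# Hypothetical prompt variants; in practice these would live in your experimentation tool.
PROMPT_VARIANTS = {
    "control": "Summarize the following support ticket.",
    "concise": "Summarize the following support ticket in two sentences.",
}


def assign_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Deterministically bucket a user so they always see the same prompt.

    Hashing experiment name + user ID keeps assignment stable across sessions
    while letting different experiments bucket users independently.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def two_proportion_z(successes_a: int, n_a: int, successes_b: int, n_b: int) -> float:
    """z-statistic for the difference in success rate (variant B minus variant A)."""
    if n_a == 0 or n_b == 0:
        raise ValueError("each variant needs at least one exposure")
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled rate under the null hypothesis that both prompts perform equally.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0  # all-success or all-failure: no measurable difference
    return (p_b - p_a) / se


if __name__ == "__main__":
    variant = assign_variant("user-123", "support_summary_prompt", list(PROMPT_VARIANTS))
    print(variant, "->", PROMPT_VARIANTS[variant])
    # Illustrative counts only: 400/1000 vs 460/1000 completed tasks.
    z = two_proportion_z(400, 1000, 460, 1000)
    print(f"z = {z:.2f}")
```

As a rule of thumb, |z| above roughly 1.96 corresponds to a two-sided p-value below 0.05. A full experimentation platform typically layers much more on top of this (many metrics at once, guardrails, more robust statistics), which this sketch does not attempt.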

The future of AI prompt experimentation is here

As AI continues to reshape the technology landscape, the ability to rapidly test and optimize prompts will become a critical competitive advantage. And Statsig's AI Prompt Experimentation features put that power into your hands.

Whether you're an established enterprise or a scrappy startup, our platform is designed to scale with your needs, providing the insights and capabilities necessary to stay at the forefront of AI innovation.

Try Statsig AI Prompt Experiments for free

Today we've officially rolled out AI Prompt Experiments to the public. Sign up to create a free account and unlock the full potential of your AI features.

For help getting started, don't hesitate to dive into our AI prompt experimentation docs, or reach out to us with questions.

Join the ranks of forward-thinking teams who are already leveraging our platform to build the next generation of AI-powered products.

We can't wait to see what you build!
