Experiment Review Best Practices

A surprise win?

While building instant games on Facebook a few years back, a new developer switched to a newer version of an internal SDK. The switch was meant to keep the code base supportable and was expected to have no impact on users. Much to their surprise, the total number of gaming sessions shot up! The finding looked robust: it was observed over 2 weeks and had a low p-value.

A more experienced teammate noticed that the change also reduced time spent in the game. It’s odd for the number of sessions to rise while time spent falls. They looked closer and found that the new library was causing the game to crash unexpectedly. The crash affected only the game, not the Facebook app hosting it, so no red flags were triggered. Many users restarted the game after it crashed, which increased sessions per user, but this definitely wasn’t a win for users, despite the initial rush to celebrate.

Unknowingly shipping bad experiments can be terrible. They’ll stick around and may negate the wins from ten good experiments!

The scientific method

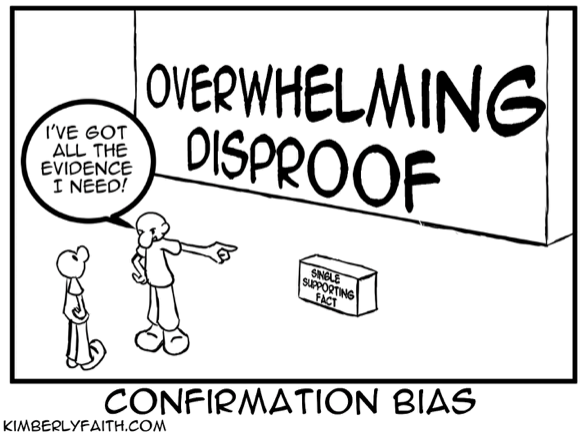

Confirmation bias is powerful. It is easy to cherry-pick test results to find data that supports your pet hypothesis. Developing a good experimentation culture involves helping the team use the scientific method to avoid bias.

As the story above shows, this isn’t a natural default and won’t be solved by a one-time training exercise. Establishing this culture takes active reinforcement, room for unbiased observers to question results, and diverse perspectives on problems and data. Read on for ideas on how companies like Facebook did this!

The experimentation gap

☝️ The experimentation gap is real (article). Statsig was created to remove this gap.

Companies like Spotify, Airbnb, Amazon and Facebook (now Meta) have built up practical tribal knowledge about running experiments internally. They’ve carefully tweaked traditional academic recommendations to run 10x more parallel experiments. In their product experimentation, they bias primarily for speed of learning.

Experimentation review culture

At companies like Facebook, teams as small as 20–30 establish their own experiment review practices, similar to how they formalize code review practices for the group. The specifics vary by team and maturity, but this outline is a reasonable starting point to tailor to your needs.

1. Experiment kick off

People get intimidated and flustered if you ask for a hypothesis. It is really just asking why! Questions to cover: Why did you make this change? What do you expect it to do? How do you think you could measure or confirm this? Capturing this at the outset helps set shared context with the team for the next few steps.
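The kickoff questions can be captured in a small structured record so that reviewers later see the same context. The sketch below is only an illustration: the class name `ExperimentBrief`, its fields, and the example values are hypothetical and not part of any particular tool.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ExperimentBrief:
    """Hypothetical kickoff record; all field names are illustrative."""
    name: str
    why: str                       # Why did you make this change?
    expected_effect: str           # What do you expect it to do?
    primary_metrics: List[str]     # How could you measure or confirm this?
    guardrail_metrics: List[str] = field(default_factory=list)

    def missing_fields(self) -> List[str]:
        """Return the kickoff questions that were left unanswered."""
        missing = []
        if not self.why.strip():
            missing.append("why")
        if not self.expected_effect.strip():
            missing.append("expected_effect")
        if not self.primary_metrics:
            missing.append("primary_metrics")
        return missing


# Example values loosely modeled on the SDK upgrade story in the intro.
brief = ExperimentBrief(
    name="sdk_upgrade",
    why="Keep the code base supportable by moving to the newer SDK.",
    expected_effect="No user-visible change.",
    primary_metrics=["sessions_per_user", "time_spent_per_user"],
    guardrail_metrics=["crash_rate"],
)
print(brief.missing_fields())
```

A brief with unanswered questions is a signal to pause before launch, not a hard gate; how strictly to enforce it is a team decision.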

2. Evaluating the hypothesis

You consult the initial hypothesis and see if there is evidence for it. If not, you can either fail to reject the null hypothesis (you could not detect an effect from your change), or see if there are alternative hypotheses that should be considered and that better match the data. Depending on what you find, you then suggest a next step: ship, investigate, iterate or abandon.
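As a rough illustration of checking the data against the hypothesis, the sketch below runs a two-proportion z-test on a conversion-style metric using only the Python standard library. It assumes a binary outcome per user, samples large enough for the normal approximation, and a conversion rate that is not exactly 0 or 1. The function names, the 0.05 threshold, and the counts are illustrative assumptions; an experimentation platform may use different statistics.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_test(conv_control, n_control, conv_test, n_test, alpha=0.05):
    """Compare conversion rates; return (delta, p_value, confidence_interval)."""
    p_control = conv_control / n_control
    p_test = conv_test / n_test
    delta = p_test - p_control

    # Pooled standard error: used for the test statistic under the null.
    p_pool = (conv_control + conv_test) / (n_control + n_test)
    se_pool = sqrt(p_pool * (1 - p_pool) * (1 / n_control + 1 / n_test))
    z = delta / se_pool
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))

    # Unpooled standard error: used for the confidence interval on delta.
    se = sqrt(p_control * (1 - p_control) / n_control
              + p_test * (1 - p_test) / n_test)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    return delta, p_value, (delta - z_crit * se, delta + z_crit * se)


def evidence_for(expected_direction, delta, p_value, alpha=0.05):
    """Classify the result against the hypothesis written at kickoff.

    expected_direction is +1 if an increase was predicted, -1 for a decrease.
    """
    if p_value >= alpha:
        return "inconclusive"  # fail to reject the null hypothesis
    return "supports" if delta * expected_direction > 0 else "contradicts"


# Made-up counts: 1,000 of 10,000 control users convert vs 1,100 of 10,000 in test.
delta, p_value, ci = two_proportion_test(1000, 10_000, 1100, 10_000)
verdict = evidence_for(+1, delta, p_value)
print(f"delta={delta:.4f} p={p_value:.4f} ci=({ci[0]:.4f}, {ci[1]:.4f}) -> {verdict}")
```

The verdict only says whether the data agrees with the stated hypothesis. Choosing between ship, investigate, iterate and abandon still needs the judgment described in the review steps.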

The person (or people) driving the experiment publishes this evaluation to start the review conversation. Having the experiment owner drive this encourages people to own their experiment and results. It also helps people learn to read experiment data and later contribute to helping others review.

3. Critique/Reviews

Similar to code reviews, a key part of experiment review culture is having people who are not emotionally attached to the experiment review it. Reviewers also bring diverse experiences that broaden the perspective of the people closest to the experiment.

Reviewers bring critical lenses including—

Is the metric movement explainable? (see story in the intro to this article)

Are all significant movements being reported, not just the positive ones? (confirmation bias makes it easier to see the impacts we want to see)

Are guardrail metrics being violated? (is the tradeoff worth it? are there non-negotiable tradeoffs?)

Is there a quota we’re drawing from? (e.g. apps might have budgets for latency or APK size that all experiments must draw from to ensure we don’t slow the app down in our pursuit of features)

Is the experiment driving the outcome we ultimately want? (once measures turn into goals, it’s possible to incentivize undesirable behavior unless we’re prudent; see the Hanoi Rat Problem for an interesting example)

Is the result protected against p-hacking (or selective reporting)? (often by establishing guidelines such as reporting results over a fixed window of about 14 days; see more about reading results safely here)
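Some of these lenses can be partly automated before a human reads the results. The sketch below lists every statistically significant movement (not just the wins) and flags guardrail regressions. The `MetricResult` type, the 0.05 threshold, and the numbers are made-up illustrations loosely modeled on the intro story, not real data or a real API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MetricResult:
    name: str
    relative_change: float          # 0.08 means +8% versus control
    p_value: float
    higher_is_better: bool = True
    is_guardrail: bool = False


def significant_movements(results: List[MetricResult], alpha: float = 0.05):
    """Every significant movement, good or bad, so nothing is cherry-picked."""
    return [r for r in results if r.p_value < alpha]


def is_regression(result: MetricResult) -> bool:
    """True when the metric moved in the undesirable direction."""
    if result.higher_is_better:
        return result.relative_change < 0
    return result.relative_change > 0


def guardrail_violations(results: List[MetricResult], alpha: float = 0.05):
    """Significant regressions on metrics marked as guardrails."""
    return [r for r in significant_movements(results, alpha)
            if r.is_guardrail and is_regression(r)]


# Illustrative values only.
results = [
    MetricResult("sessions_per_user", +0.08, 0.001),
    MetricResult("time_spent_per_user", -0.05, 0.010),
    MetricResult("crash_rate", +0.30, 0.001,
                 higher_is_better=False, is_guardrail=True),
    MetricResult("messages_sent", +0.01, 0.400),
]

for r in significant_movements(results):
    label = "regression" if is_regression(r) else "improvement"
    print(f"{r.name}: {r.relative_change:+.0%} ({label})")
print("guardrails violated:", [r.name for r in guardrail_violations(results)])
```

A report like this would put the sessions win next to the drop in time spent and the crash regression, which is the combination a reviewer needs to see to ask whether the movement is explainable.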

The composition of the review group drives how effectively they represent these lenses. Many experimentation groups feature an experienced software engineer and a data scientist familiar with the area who can help make sure that these perspectives are considered. They also have a sense of history, and of when to ignore this history (see no sacred cats reference here).

4. Review Formats

Small teams often start experiment reviews as in-person meetings, but these rarely scale as you make experimentation ubiquitous. Ideally, most changes are shipped via A/B tests, and your process needs to scale to this.

Defaulting to offline reviews works really well! Save the weekly “live” review meeting for experiments where the offline review suggests there’s an interesting conversation or learning to discuss.

This makes communication more efficient: not everyone inside and outside your team can attend your experiment review meetings. Offline posts broadcast the results to everyone and make sure everyone has an opportunity to chime in.

Writing is thinking

Effective online and offline reviews require a culture of writing down the experiment proposal, learnings and next steps. This requires investment by the experiment owner, but your ability to have people critically reason and share learning and insights across the organization multiplies when you do this.

5. Build a learning organization

A key outcome from running experiments is building insight and learning that persist well past the individual experiment or feature. These insights help shape priorities, suggest ideas for future explorations and help evolve guardrail metrics. Many of these insights seem obvious with hindsight.

Examples of widely established insights that teams have accumulated—

Amazon famously reduced distractions during checkout flows to improve conversion. This is a pattern that most ecommerce sites now optimize for.

Very low latency messaging notifications and the little “so-and-so is typing” indicators dramatically increase messaging volumes in person-to-person messaging. A user who’s just sent a message is likely to wait and continue the conversation instead of putting their phone away if they know the other party is responding right now.

Facebook famously found that getting a new user to 7 friends in their first 10 days was a key insight on the way to a billion users. With hindsight, it’s obvious that a user who experiences this is more likely to see a meaningful feed with things that keep them coming back.

Even when things don’t go well, it’s useful to capture learning. Run an experiment that tanks key metrics? Fantastic — you’ve found something that struck a nerve with your users. That too can help inform your path ahead!

Ultimately, experimentation is a means to building a learning organization. Have a fun story on something you’ve learnt? We’d love to hear from you!