After spending the last 7.5 years in the experimentation and feature management space, one mistake I see customers and practitioners make all too often is drawing conclusions from a test too early in the process.

Online experimentation is becoming more commonplace across all types of businesses today. While platforms like Statsig can significantly enhance your testing capabilities, there is a definite risk/reward tradeoff when basing your decisions on early test results.

In this blog post, we will cover some things to remember when looking at early test results and features within Statsig to help you feel confident when shipping a winning variant.

Risk vs. Reward

When making decisions early in the lifecycle of an experiment, there are some relatively simple risk and reward tradeoffs:

Risks:

Novelty effects: Early results may be influenced by novelty effects, where the initial response to a change does not indicate long-term behavior. Think of tests on new products that might have gone viral over the weekend or just started an ad campaign. Novelty effects can also contribute to the next risk, noisy data.

Noisy data: Early data can be noisy and may not represent the true effect of the experiment, leading to incorrect conclusions (higher likelihood of false positives/false negatives).

Rewards:

Early insights: Early results can provide quick feedback, allowing for faster iteration and improvement of features.

Resource allocation: Identifying a strong positive or negative trend can help decide whether to continue investing resources in the experiment.

Acting on limited data can be risky. If you make choices too soon, you might think something works when it doesn't or kill something that does work, leading to false positives/negatives.

This oversight can mislead teams, causing them to waste time and resources, overlook important findings, and make plans that don't match what users want or what's best for the business.
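To make the false positive risk concrete, here is a minimal simulation sketch of an A/A test, where both groups share the same true conversion rate, so every "significant" result is a false positive. All names and parameters (`false_positive_rates`, 14 days, 100 users per group per day, a 10% conversion rate) are hypothetical choices for illustration and are not taken from Statsig. It compares checking a standard fixed-sample z-test every day against checking it once at the end.

```python
import random
from statistics import NormalDist


def z_stat(conversions_a, conversions_b, n_per_group):
    """Two-proportion z statistic for two groups of equal size."""
    pooled = (conversions_a + conversions_b) / (2 * n_per_group)
    std_err = (2 * pooled * (1 - pooled) / n_per_group) ** 0.5
    if std_err == 0:
        return 0.0
    return (conversions_b - conversions_a) / n_per_group / std_err


def false_positive_rates(n_sims=500, days=14, users_per_day=100,
                         rate=0.10, alpha=0.05, seed=7):
    """Simulate A/A tests and return (rate with daily peeking, rate at the end)."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    peeking_hits = 0
    final_hits = 0
    for _sim in range(n_sims):
        conv_a = conv_b = n = 0
        crossed_on_any_day = False
        z = 0.0
        for _day in range(days):
            # Both groups convert at the same true rate: no real effect exists.
            conv_a += sum(rng.random() < rate for _ in range(users_per_day))
            conv_b += sum(rng.random() < rate for _ in range(users_per_day))
            n += users_per_day
            z = z_stat(conv_a, conv_b, n)
            if abs(z) > z_crit:
                crossed_on_any_day = True
        peeking_hits += crossed_on_any_day
        final_hits += abs(z) > z_crit
    return peeking_hits / n_sims, final_hits / n_sims


peeking_rate, final_rate = false_positive_rates()
print(f"false positives when stopping at the first significant day: {peeking_rate:.1%}")
print(f"false positives when evaluating once at the end: {final_rate:.1%}")
```

In this setup the end-of-test rate stays near the nominal 5%, while acting on the first significant daily readout produces a noticeably higher rate, even though nothing about the product changed.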

Let’s explore some ways you can get the compounding reward of making faster decisions while mitigating much of the risk.

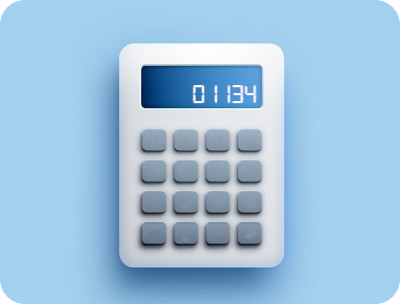

Power analysis and its importance

Before running an experiment, it is crucial to know how long it should run and how many participants you need to obtain reliable results at your desired minimum detectable effect (MDE). This is where power analysis comes into play: it helps you avoid wasting cycles on impossible experiments and set the correct expectations for experiment durations.

Power analysis helps you determine the sample size required to detect an effect of a certain size at a given significance level and statistical power.
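As a rough illustration of that calculation, the sketch below uses the standard normal-approximation formula for a two-sided, two-sample test with equal group sizes: n per group = 2 * ((z_alpha + z_power) * sigma / delta)^2. The function name and the example inputs are hypothetical, and this is not a description of how Statsig's calculator works internally.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(baseline_mean, std_dev, relative_mde,
                          alpha=0.05, power=0.80):
    """Users needed per group to detect a relative lift of `relative_mde`.

    Assumes a two-sided test, equal group sizes, and the same standard
    deviation in both groups (normal approximation).
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    absolute_mde = baseline_mean * relative_mde
    return ceil(2 * ((z_alpha + z_power) * std_dev / absolute_mde) ** 2)


# Hypothetical metric: 10% conversion rate, so std_dev = sqrt(0.1 * 0.9) = 0.3.
for mde in (0.10, 0.05):
    n = sample_size_per_group(baseline_mean=0.10, std_dev=0.30, relative_mde=mde)
    print(f"relative MDE {mde:.0%}: about {n} users per group")
```

Note the quadratic relationship: halving the MDE roughly quadruples the required sample size, which is why small expected effects can make an experiment impractical.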

Statsig's Power Analysis Calculator is a tool designed to make this process straightforward, ensuring that your experiments are neither underpowered (risking missing a true effect) nor overpowered (wasting resources).

Here is a quick breakdown of the Power Analysis Calculator within the Statsig console:

From this screenshot, you can see that we supply some information to the calculator, and it returns a summary of the results. Statsig will simulate an experiment based on your inputs, calculating population sizes and the relative variance based on historical behavior.

To use Statsig's power analysis tool and interpret the results, follow these steps:

Select a population: Choose the appropriate population for your experiment. This could be based on a past experiment, a qualifying event, or the entire user base.

Choose metrics: Input the metrics you plan to use as your evaluation criteria. You can add multiple metrics to analyze sensitivity in your target population.

Run the power analysis: Provide the above inputs to the tool. Statsig will simulate an experiment, calculating population sizes and variance based on historical behavior.

Review the readout: Examine the week-by-week simulation results. This will show estimates of the number of users eligible for the experiment each day, derived from historical data.

Adjust if necessary: If the estimated sample sizes are not achievable, consider adjusting the MDE or extending the experiment duration to collect sufficient data.

Statsig's Power Analysis Calculator is helpful for planning and running experiments with confidence. By understanding the relationship between sample size, effect size, and statistical power, you can design experiments that are more likely to yield meaningful insights.
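The "adjust if necessary" step is a tradeoff between duration and MDE, which can be sketched by inverting the usual sample size formula. The example below is an assumption-laden approximation, not Statsig's simulation: it assumes a constant number of new, unique eligible users each day, a 50/50 split, and a normal approximation. The function name and inputs are hypothetical.

```python
from statistics import NormalDist


def relative_mde_by_week(daily_eligible_users, baseline_mean, std_dev,
                         weeks=4, alpha=0.05, power=0.80):
    """Return [(week, relative MDE)] for a two-sided test with a 50/50 split."""
    z_total = NormalDist().inv_cdf(1 - alpha / 2) + NormalDist().inv_cdf(power)
    results = []
    for week in range(1, weeks + 1):
        # Assumption: every day brings the same number of new unique users.
        n_per_group = daily_eligible_users * 7 * week / 2
        absolute_mde = z_total * std_dev * (2 / n_per_group) ** 0.5
        results.append((week, absolute_mde / baseline_mean))
    return results


# Hypothetical inputs: 4,000 eligible users per day, 10% conversion rate.
for week, mde in relative_mde_by_week(4000, baseline_mean=0.10, std_dev=0.30):
    print(f"week {week}: smallest detectable relative lift ~ {mde:.1%}")
```

Because the MDE shrinks with the square root of the sample size, running four times as long only halves the detectable effect. If the week-by-week numbers never reach the effect you expect, a different metric or a larger population may help more than a longer run.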

The allure of early results and the importance of patience

The temptation to make swift decisions based on early experiment data is understandable. Early results can suggest a clear path forward, offering the promise of speed to market and development efficiencies. The potential benefits are alluring: a faster iteration cycle, quick validation of ideas, and the ability to pivot or ship quickly.

One of the most critical aspects of the analysis stage of experimentation is patience. A good rule of thumb is: patience leads to confidence.

Confidence is key when examining test results, and Statsig provides a robust framework to ensure that the insights you derive from your experiments are reliable and actionable.

Statsig’s advanced statistical engine employs methodologies that can help reach statistical significance faster, like sequential testing and CUPED. You can also run Multi-armed Bandits to accelerate experimentation cycles. These techniques enable us to deliver precise and trustworthy results to our customers.

1. Sequential testing

Sequential testing is designed to enable early decision-making in experiments when there's sufficient evidence, while also limiting the risk of false positives. This method is particularly useful for identifying unexpected regressions early and distinguishing significant effects from random fluctuations. It allows experiment results to be evaluated at multiple points during the experiment without inflating the false positive rate, which is the usual danger of checking results before the planned end date, known as 'peeking'.

When enabled, sequential testing applies an adjustment to the p-values and confidence intervals to account for the increased false positive rate associated with peeking. This means that if an improvement in key metrics is observed, an early decision could be made, which is especially valuable when there's a significant opportunity cost to delaying the experiment decision. However, caution is advised: early statistically significant results for certain metrics don't guarantee sufficient power to detect regressions in other metrics.
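To illustrate the idea of adjusting for repeated looks, here is a deliberately simple sketch that uses a Bonferroni correction across a fixed number of planned looks. This is a swapped-in, conservative technique chosen for clarity; it is not the adjustment Statsig applies, and the function names and z-scores are hypothetical.

```python
from statistics import NormalDist


def bonferroni_z_threshold(alpha, planned_looks):
    """Two-sided z threshold when alpha is split evenly across all looks."""
    return NormalDist().inv_cdf(1 - alpha / (2 * planned_looks))


def first_significant_look(z_scores, alpha=0.05, adjust=True):
    """Return the 1-based look at which the result first crosses the threshold.

    With adjust=False this mimics naive peeking with the fixed-sample
    threshold (about 1.96 for alpha=0.05).
    """
    looks = len(z_scores) if adjust else 1
    threshold = bonferroni_z_threshold(alpha, looks)
    for look, z in enumerate(z_scores, start=1):
        if abs(z) > threshold:
            return look
    return None


# Hypothetical z-scores observed at four planned looks.
observed = [1.2, 2.1, 2.3, 3.2]
print("naive peeking stops at look:", first_significant_look(observed, adjust=False))
print("adjusted analysis stops at look:", first_significant_look(observed, adjust=True))
```

The adjusted threshold is stricter at every look, so a borderline early result no longer triggers a decision, while a strong effect can still be called before the planned end. Production sequential methods are typically less conservative than this correction.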

2. CUPED (Controlled-experiment Using Pre-Experiment Data)

CUPED is a statistical technique that enhances the accuracy and efficiency of running experiments by leveraging pre-experimental data to reduce variance and pre-exposure bias in experiment results. By using information about an experiment's users from before the experiment started, CUPED adjusts the user's metric value, which helps to explain part of the error that would otherwise be attributed to the experiment group term. This reduction in variance can lead to significant benefits for experiments:

It can shrink confidence intervals and p-values, which means that statistically significant results can be achieved with a smaller sample size.

It reduces the duration required to run an experiment because less data is needed to reach conclusive results.

However, CUPED is most effective when the pre-experiment behavior is correlated with the post-experiment behavior and is not suitable for new users without historical data.
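The core CUPED adjustment can be sketched in a few lines. The example below uses the commonly described form, adjusted = y - theta * (x - mean(x)) with theta = cov(x, y) / var(x), on synthetic data where pre-experiment and in-experiment values are correlated. The data, the function name, and the single-group setup are assumptions for illustration; a real experiment would typically estimate theta on users pooled across all groups, and Statsig's implementation may differ in its details.

```python
import random
from statistics import mean, variance


def cuped_adjust(pre_values, post_values):
    """Return CUPED-adjusted metric values using one pre-experiment covariate."""
    pre_mean = mean(pre_values)
    post_mean = mean(post_values)
    covariance = sum(
        (x - pre_mean) * (y - post_mean)
        for x, y in zip(pre_values, post_values)
    ) / (len(pre_values) - 1)
    theta = covariance / variance(pre_values)
    # Subtract the part of each user's value explained by pre-experiment behavior.
    return [y - theta * (x - pre_mean) for x, y in zip(pre_values, post_values)]


# Synthetic users: in-experiment values depend on pre-experiment values.
rng = random.Random(42)
pre = [rng.gauss(10, 3) for _ in range(2000)]
post = [0.8 * x + rng.gauss(2, 1.5) for x in pre]
adjusted = cuped_adjust(pre, post)

print(f"mean before / after adjustment: {mean(post):.3f} / {mean(adjusted):.3f}")
print(f"variance before / after adjustment: {variance(post):.2f} / {variance(adjusted):.2f}")
```

The mean is unchanged while the variance drops, which is what narrows the confidence interval. If the pre-experiment data were uncorrelated with the metric, theta would be near zero and the adjustment would have almost no effect.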

The graphic below shows how much CUPED would reduce required sample sizes (and experiment run times) due to lower metric variance. This is based on 300+ customers utilizing CUPED within a Statsig experiment.

3. Multi-armed bandits

Multi-armed bandit (MAB) experiments help you experiment faster and more safely by dynamically adjusting the traffic allocation towards different variations based on their performance.

Unlike traditional A/B testing, where traffic is split evenly and the allocation does not change during the test, MAB algorithms continuously shift more users to better-performing treatments. This maximizes the potential reward (e.g., conversions) during the experiment itself, rather than waiting until the end to implement the best variation.

As a result, MABs can reduce the opportunity cost of showing sub-optimal variations to users and can be particularly useful for continuous experimentation without a fixed end date. They also minimize risk by progressively allocating less traffic to underperforming treatments.

However, MABs typically optimize for a single metric, which is a consideration when planning experiments.
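One common way to implement this kind of dynamic allocation is Thompson sampling with a Beta posterior per variation. The sketch below is a generic textbook version for a single binary conversion metric; the function name, conversion rates, and round count are hypothetical, and it is not presented as the algorithm behind Statsig's bandit product.

```python
import random


def thompson_sampling(true_rates, rounds=5000, seed=0):
    """Simulate a Bernoulli bandit and return how many users each arm received."""
    rng = random.Random(seed)
    arms = len(true_rates)
    successes = [0] * arms
    failures = [0] * arms
    pulls = [0] * arms
    for _ in range(rounds):
        # Draw a plausible conversion rate for each arm from its Beta posterior
        # (uniform Beta(1, 1) prior), then show the user the arm with the best draw.
        draws = [rng.betavariate(s + 1, f + 1) for s, f in zip(successes, failures)]
        arm = draws.index(max(draws))
        pulls[arm] += 1
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return pulls


# Hypothetical variations converting at 5% and 15%.
pulls = thompson_sampling([0.05, 0.15])
print("users per variation:", pulls)
```

Early on, both variations receive traffic because their posteriors overlap. As evidence accumulates, the weaker variation is sampled less and less, which is the mechanism behind the reduced opportunity cost described above. The single reward signal in the loop also shows why a bandit optimizes for one metric at a time.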

Conclusion

While early experiment results can be informative, they should always be taken with a grain of salt. A disciplined approach to experimentation, supported by robust tools like Statsig, is essential for making informed decisions that drive your product and business forward.
