I’m not really a “manual labor” guy…

Not to say I won’t rake some leaves, build a desk, or splice buttons onto my PS5 controller. I’m talking about copying and pasting, rephrasing, categorizing, and other tedious, manual tasks.

There’s a tired old trope about how AI is not good enough to "replace people." For the first six months after ChatGPT launched, LinkedIn was utterly packed with posts about how bad ChatGPT was compared to copywriters, posts laughing at how Midjourney couldn’t draw hands, and so on.

Marketers were especially spiteful in their relentless criticism of the emerging technology. That criticism makes great content for generating clicks, but it completely misses the point of AI. In my opinion, AI was never here to replace anyone, but rather to augment and assist.

AI assists me with the manual labor I so dread—the tasks that make me feel less like a marketer and more like an LLM myself.

Here’s how:

My new friend, Statbot AI

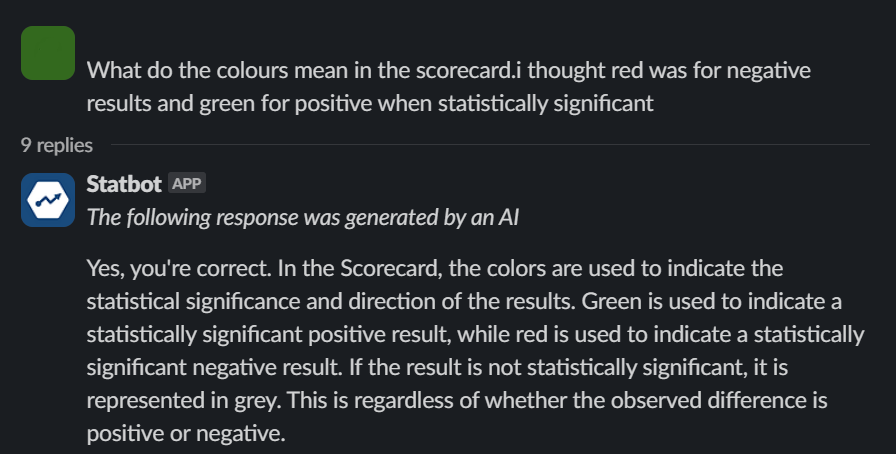

People love Statsig’s customer support. One of the coolest things about our Slack community is that users are encouraged to ask (and answer) questions, and generally communicate about data science and experimentation ideas.

Our support team is quick to respond, but sometimes users are looking for immediate help with an issue they’re facing. Thanks to the ingenuity of Alex Boquist, Kenny Yi, and Joe Zeng, Statbot was born to address this need.

Statbot is an AI model trained on our documentation and plugged into our Slack community as an app. When a user asks a question, it answers immediately, based on its training data. Consider it an in-app documentation expert.

Statbot is powered by Scout, and anyone in our Slack community can interact with it. There are also a few features for AI developers in our Scout dashboard, which I’ll show later.

How Glossarybot was created

Why a glossary?

Statsig is full of help. Our website documentation is comprehensive and includes walkthroughs and guides on tons of relevant topics.

And while our docs are comprehensive, they don’t expressly provide simple, one-off answers to commonly asked questions. Sometimes somebody just wants a 2-3 paragraph explanation of what a canary launch is.

Glossary entries are usually used as an offhand definition to link to (for readability) in blog posts and other places where a deep dive—like a link to setup documentation—isn’t necessary or relevant.

But in terms of prioritization, writing a glossary would take weeks, and there are much better things to spend that time on…

…Unless? 👀

The hackathon

Hackathons are deeply ingrained in Statsig culture, a throwback to the old days at Meta—where many of our engineers met. Above all else, hackathons are a chance for everyone to take two days to freely work on whatever projects they choose.

By this time, our lack of a glossary had been in the back of my mind for almost a year. For me, this hackathon was an opportunity to finally address it.

For the glossary project, I partnered with Alex from Scout, Sid, and Vijaye to create a curation, writing, and publishing workflow for glossary entries. Curation is easy. Publishing is simple for our front-end developer. The writing part, however…full of manual tasks.

Configuring an AI Glossarybot

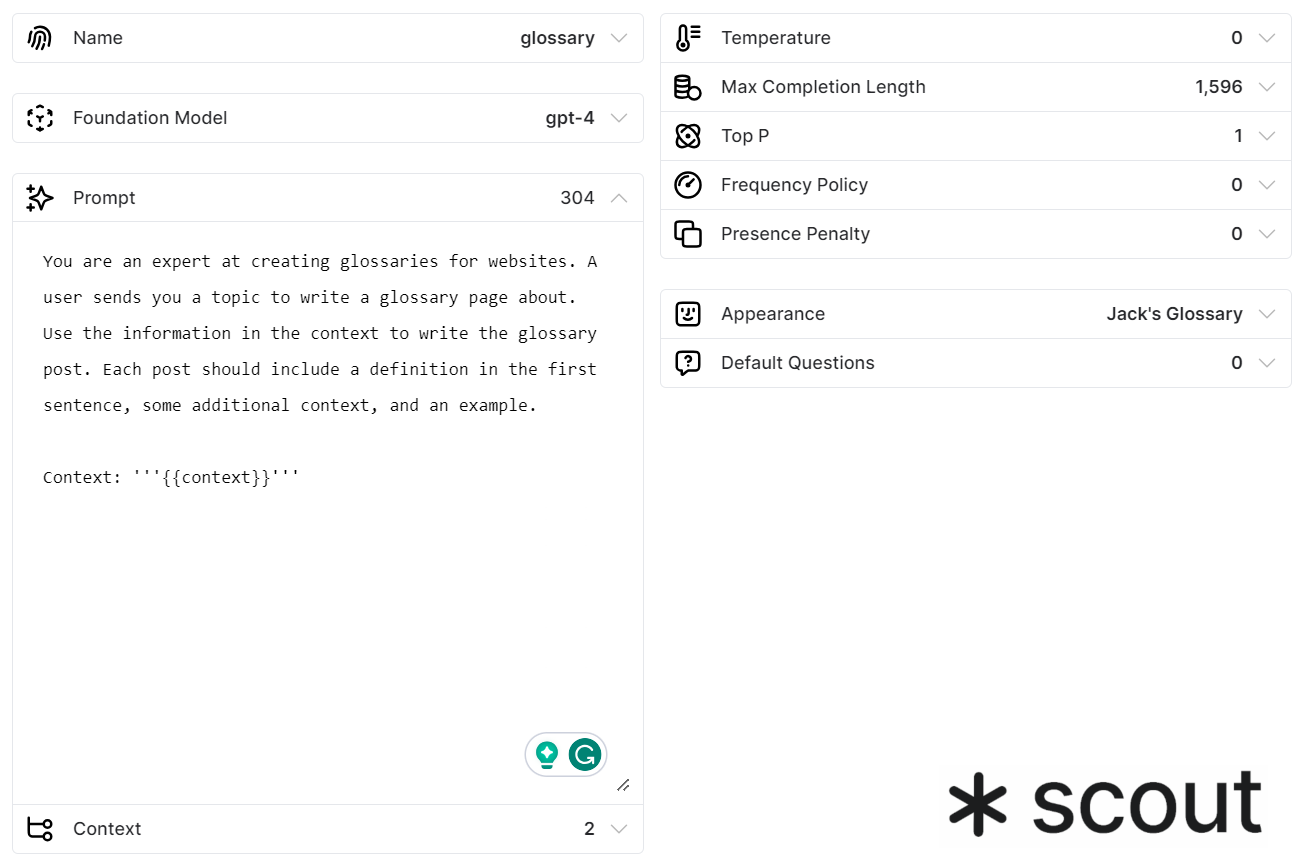

Using Scout's features, Alex and I were able to create a “new” Statbot expressly designed to help write glossary entries. Glossarybot is a GPT-4 model at its core, trained on Statsig docs and blog posts. Let’s break it down as it appears in the Scout dashboard:

Foundation model: Determines which GPT model is used to generate responses. GPT-4 is great for readability.

Prompt: Consider this the bot’s standing instructions. A user gives it input, and it uses the prompt as the basis for generating output.

Context: The source material on which the model is trained. For Glossarybot, it’s a split between Statsig’s docs and blog, with a 2:1 emphasis given to docs.

Temperature: Determines how random a model’s output is. Higher temperature is riskier; lower temperature plays it safe.

Max completion length: Sets an upper limit on response length.
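
To make those five settings concrete, here is a minimal Python sketch of how they might be written down as a config object. This is not Scout's actual API or schema: the `BotConfig` class, its field names, the prompt text, and the temperature and length values are all made up for illustration. Only the GPT-4 model and the 2:1 docs-to-blog weighting come from the setup described above.

```python
from dataclasses import dataclass, field


@dataclass
class BotConfig:
    """Hypothetical container mirroring the five dashboard settings."""

    foundation_model: str
    prompt: str
    # Relative emphasis per context source, e.g. {"docs": 2, "blog": 1}.
    context_weights: dict[str, int] = field(default_factory=dict)
    temperature: float = 0.5
    max_completion_length: int = 512

    def context_shares(self) -> dict[str, float]:
        """Convert relative weights into fractions that sum to 1."""
        total = sum(self.context_weights.values())
        if total == 0:
            return {}
        return {name: weight / total for name, weight in self.context_weights.items()}


glossarybot = BotConfig(
    foundation_model="gpt-4",
    prompt="Write a short glossary entry for the topic the user provides.",  # assumed wording
    context_weights={"docs": 2, "blog": 1},  # 2:1 emphasis given to docs
    temperature=0.3,  # assumed value, not the real setting
    max_completion_length=600,  # assumed value, not the real setting
)

print(glossarybot.context_shares())
```

With a 2:1 weighting, roughly two thirds of the context emphasis goes to docs and one third to the blog.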

From here, it’s just a matter of giving Glossarybot topics to write about, and then providing minimal edits and tweaks to its output. Bada bing, bada boom, as they say.
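
Of the settings above, temperature is probably the least intuitive, so here is a rough sketch of the general idea. It shows the standard temperature-scaled softmax that language models use when picking the next token. The scores and the function name are invented for illustration, and this is not how Scout or GPT-4 is implemented internally.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    largest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - largest) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


# Made-up scores for three candidate next words.
logits = [2.0, 1.0, 0.5]

low_temp = softmax_with_temperature(logits, 0.2)   # plays it safe
high_temp = softmax_with_temperature(logits, 2.0)  # riskier

print("low temperature: ", [round(p, 3) for p in low_temp])
print("high temperature:", [round(p, 3) for p in high_temp])
```

At a low temperature nearly all of the probability lands on the top-scoring word, while at a high temperature the options even out, which is why higher settings produce more surprising output.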

👉 Related reading: How to optimize model temperature with Autotune


How much time did this save?

The balance between leveraging AI for manual tasks and still applying yourself elsewhere isn’t as tricky as one might think. There is already a plethora of things AI can do better than humans. To no one’s surprise, referencing every single blog post and documentation entry is one of them.

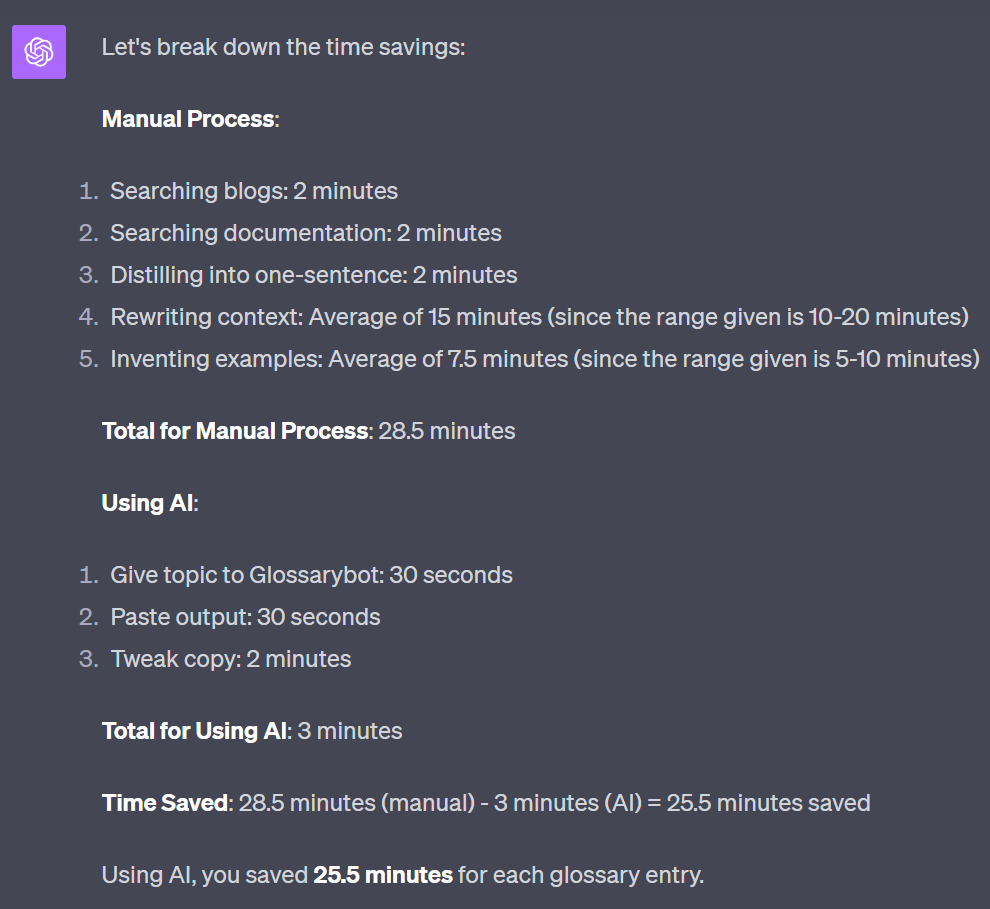

Without using AI, the manual process of creating a glossary entry would’ve been something like this:

(2 minutes) Search our blogs for the target topic

(2 minutes) Search our documentation for the target topic

(2 minutes) Distill the target topic into a one-sentence definition

(10-20 minutes) Rewrite any related context based on the source material

(5-10 minutes) Invent an example or two

Using AI, the process becomes:

(30 seconds) Give Glossarybot a topic

(30 seconds) Paste the output into a document for formatting

(2 minutes) Tweak copy for readability

Thanks to Glossarybot, I was able to burn through 60 glossary entries in a day. This time included choosing topics, formatting posts, and participating in other hackathon projects as well.
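
For a back-of-the-envelope comparison, the step estimates above can be added up in a few lines of Python. The minute values and the 60-entry count come straight from this post; the variable names are just labels I made up, and the totals are rough estimates, not measured results.

```python
# (low, high) estimates in minutes for each manual step listed above.
MANUAL_STEPS = {
    "search blogs": (2, 2),
    "search documentation": (2, 2),
    "one-sentence definition": (2, 2),
    "rewrite related context": (10, 20),
    "invent examples": (5, 10),
}

# Estimates in minutes for each AI-assisted step listed above.
AI_STEPS = {
    "give Glossarybot a topic": 0.5,
    "paste output for formatting": 0.5,
    "tweak copy": 2,
}

ENTRIES = 60

manual_low = sum(low for low, _ in MANUAL_STEPS.values())
manual_high = sum(high for _, high in MANUAL_STEPS.values())
ai_total = sum(AI_STEPS.values())

manual_hours = (manual_low * ENTRIES / 60, manual_high * ENTRIES / 60)
ai_hours = ai_total * ENTRIES / 60

print(f"Manual: {manual_low}-{manual_high} minutes per entry")
print(f"With AI: {ai_total} minutes per entry")
print(f"{ENTRIES} entries manually: {manual_hours[0]}-{manual_hours[1]} hours")
print(f"{ENTRIES} entries with AI: {ai_hours} hours")
```

By these estimates, 60 entries would take somewhere between 21 and 36 hours by hand versus about 3 hours of hands-on time with Glossarybot.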

As a general rule: Of course AI cannot write as well as a dedicated writer. Comparing a GPT blog post to one that a copywriter wrote—and then judging the outputs’ content quality—feels like deliberately missing the point. Simple solution: Don’t have AI write your content from scratch.

Remember, LLM output will always be largely neutral. Humans have the advantage of being subject matter experts, having insight into the proper voice and tone, etc., and will always outperform even the most well-trained AIs when it comes to content quality. AI, however, is absolutely heroic when used for the things it’s good at.

Bonus: Other ways to use AI right now

At Statsig, we embrace AI, and have found ways to weave it into many of our existing workflows. I personally use AI to reduce the manual work associated with the following:

Using Midjourney to create images that aren’t important enough to require the Statsig branding team (e.g., backgrounds, simple illustrations, and the cover image for this blog post).

Training ChatGPT on examples of social media posts so that it can help craft new ones based on inputs (sharing a blog post, advertising an event).

Leveraging Notion AI to make sweeping edits to copy, like removing title case, adjusting spacing, and more.

Using Notion AI to automatically generate project tracking tables, Kanban boards, and more, to speed up project management backend work.

Using any AI to help generate advanced spreadsheets and dashboards, and to help with formatting. (Sometimes splitting by comma just isn’t enough.)


Related AI resources:

Online experimentation: The new paradigm for building AI applications

Lessons from Notion: How to build a great AI product even if you’re not an AI company

AI Experimentation: How to optimize model temperature with Statsig’s Autotune

Video: How to optimize the performance of AI-powered features using Statsig