Envision the following:

This quarter you launched your first great product.

Your team designed the feature well, you set ambitious business targets, you built the feature well, and you designed a solid A/B test to measure the results. You launched the product as a 50/50 user split, using Statsig to assign users and analyze the results.

You get the results, and they’re amazing! Your primary metric even improved more than the goal you had set. Time to celebrate and just wait for that promotion to come, right?

Be careful, because there’s a hidden trap door waiting for you: It turns out your new feature had some unintended side effects, and three months later you discover your costs have tripled. That path to promotion is now an expensive problem you have to explain to leadership.

This type of scenario is not uncommon when launching features. Unintended consequences are a universal constant, and even when our primary metrics look great, we should be open to the possibility that not everything is peachy.

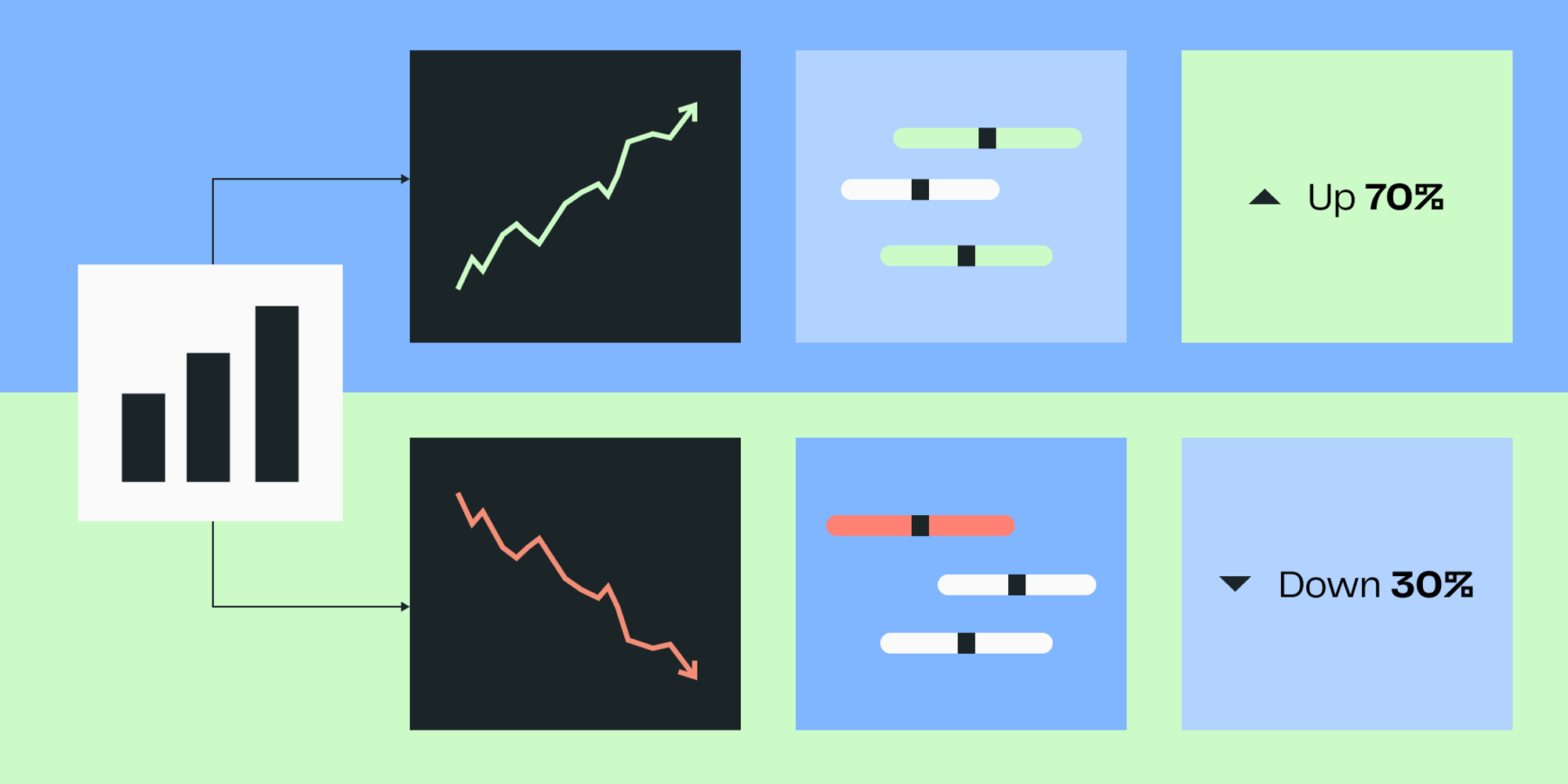

Guardrail metrics are a simple yet powerful way of double-checking our changes.

Like a guardrail that keeps your car on the road, they are your safety net. While you aim to improve specific aspects of your product through A/B testing, you shouldn’t compromise on the overall system and business health. These metrics are crucial in maintaining a balanced approach to innovation and stability.

Introduction to guardrail metrics in A/B testing

Guardrail metrics, often overlooked, play a pivotal role in the ecosystem of A/B testing. They are the metrics that ensure you don't veer off the path while chasing primary goals. These metrics monitor critical aspects of your product that could be negatively affected by the changes tested. For instance, while you might test a new feature to increase user engagement, guardrail metrics could include monitoring system performance or user churn rates.

The importance of these metrics cannot be overstated. They help maintain the integrity and balance of your experiments by:

Ensuring that gains in one area do not cause losses in another

Providing a holistic view of the impact of your tests

Implementing guardrail metrics effectively guards against potential negative side effects, preserving user experience and system functionality. This strategic approach not only saves resources but also shields your product from unintended consequences.

Primary metrics vs. guardrail metrics

In A/B testing, primary metrics and guardrail metrics serve distinct, complementary functions.

Primary metrics are your target outcomes, directly tied to the experiment's objectives. These are the things you’re actively trying to move. For example, if you're testing a new user interface, your primary metric might be the click-through rate on a feature button.

Guardrail metrics, on the other hand, safeguard the broader health of your product. While you focus on boosting that click-through rate, guardrail metrics could include monitoring page load times and user error rates. These metrics ensure that improvements in one area do not degrade overall user satisfaction or functionality.

Together, these metrics form a balanced strategy, optimizing performance while maintaining a quality user experience. By monitoring both, you ensure that your A/B tests improve specific features without compromising the system's integrity. This dual focus helps in making informed, impactful decisions that benefit both the product and its users.

Not just for mistakes

It is critical to know that guardrail metrics are not just for finding errors. A degradation in one does not mean you made a mistake. Degradations can be due to:

Complex systems

Downstream effects

Interactions with other features

Delayed effects

Guardrail metrics are your insurance policy, not a blame game. They’re a tool to help you find issues faster, and ideally, you’ll find a simple fix or rethink your product in a way that improves outcomes even more.

Real-world examples

Airbnb uses guardrail metrics to balance user experience with business growth during their experiments. For instance, while testing changes aimed at increasing bookings, they monitor guest satisfaction scores closely. This ensures that efforts to boost one performance metric do not adversely affect overall customer satisfaction.

Netflix, on the other hand, applies guardrail metrics like stream start times and buffering ratios. These metrics help ensure that while they test algorithms for personalized recommendations, the core user experience—smooth streaming—remains unaffected. This strategy prevents potential subscriber drop-off due to performance issues during tests.

At Uber, I used guardrail metrics liberally to ensure that a new matching algorithm meant to improve trip conversion rate (the share of requests that get completed as a successful trip) didn’t accidentally succeed simply by reducing the overall number of rider requests.

Selecting effective guardrail metrics

There’s no one-size-fits-all algorithm to select your metrics. You’ll rely on knowledge of your product, your users, your business, and your data to make a decision about what to measure.

What to add:

One strategy is to choose guardrails from among your core business objectives and consider which metrics reflect the health of your product or service. For example, if customer retention is key to your product’s success, then regardless of what’s changing in your A/B test, you could add metrics like user churn or session duration to all your tests.

Another strategy is to anticipate what could go wrong in a launch. For example, what are the potential drop-offs in a funnel, and if one of these degraded could it make your primary metrics accidentally look better? That’s a great opportunity to double-check that your results really are as good as they look.

Others will prioritize guardrails that would identify technical issues. A degraded page load time, an increase in user retries, and an increased bug report rate are all classic examples.
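To make the funnel scenario concrete, here is a minimal sketch with made-up numbers, loosely modeled on the trip-conversion example above. The `Arm` class and every count in it are hypothetical and exist only for illustration. It shows how a conversion rate can go up while the thing you actually care about (completed trips) goes down.

```python
from dataclasses import dataclass


@dataclass
class Arm:
    """Hypothetical per-arm totals for one experiment group."""
    requests: int
    completed_trips: int

    @property
    def conversion_rate(self) -> float:
        # Share of requests that end in a completed trip.
        return self.completed_trips / self.requests


def relative_change(treatment: float, control: float) -> float:
    """Relative delta of treatment vs. control, e.g. 0.05 means +5%."""
    return treatment / control - 1.0


# Made-up numbers: treatment converts better, but on fewer requests.
control = Arm(requests=10_000, completed_trips=7_000)
treatment = Arm(requests=9_000, completed_trips=6_480)

print(f"conversion rate: {relative_change(treatment.conversion_rate, control.conversion_rate):+.1%}")
print(f"requests:        {relative_change(treatment.requests, control.requests):+.1%}")
print(f"completed trips: {relative_change(treatment.completed_trips, control.completed_trips):+.1%}")
```

In this toy case the primary metric improves, yet both requests and completed trips fall. A guardrail on either of those two counts would flag the problem.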

When to be restrained

Guardrails are powerful, but more is not always better. And sometimes your desired guardrail will simply never be useful.

Risk of noise

Every metric in an A/B test is subject to real-world noise, and random chance will produce some “statsig” detections that are just noise. Remember, when you design your experiment with a significance level of 5% (i.e., 95% confidence), you should expect about 5% of the metrics with no real difference to show up as “statsig” on your readout anyway.

The more metrics you add to an experiment (of any type), the more likely it is that at least one of them shows a “statsig” result from noise alone. Always ensure that your guardrail metrics provide clear insights without causing data overload. Too many metrics can cloud decision-making.
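As a rough illustration of how quickly this adds up, the sketch below computes the chance of at least one false alarm across several metrics. It assumes the metrics are independent and that none has a real effect. Real metrics are usually correlated, so treat the numbers as an approximation. The function name is ours, not part of any library.

```python
def prob_any_false_positive(num_metrics: int, alpha: float = 0.05) -> float:
    """Chance that at least one of `num_metrics` independent metrics
    with no true effect is flagged at significance level `alpha`."""
    return 1.0 - (1.0 - alpha) ** num_metrics


for k in (1, 5, 10, 20):
    print(f"{k:>2} metrics: {prob_any_false_positive(k):.0%} chance of at least one false alarm")
```

Under these assumptions, ten metrics already carry roughly a 40% chance of at least one spurious detection.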

Sensitivity and statistical power limitations

There’s no guarantee that your experiment will have the sensitivity to measure deltas in every guardrail metric. You likely designed your experiment sample size based on a primary metric; however, this metric’s variance might look entirely different from a guardrail’s.

You might be set up to measure primary metric MDEs (minimum detectable effects) of 1%, but that business-critical guardrail would only be statistically detectable at a change of 10%, 20%, or even 30%. This shows up as huge confidence intervals around the metric.

In these cases, it’s best to choose guardrails that are meaningful and well-powered at the sample sizes you expect in your experiment. You can run secondary power analyses (after your primary power analysis) to check if guardrails are even meaningful for a given design sample size.
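A secondary power analysis can be as simple as plugging each guardrail’s mean and standard deviation into the same MDE formula you used for the primary metric. The sketch below uses the standard two-sample z-test approximation with equal group sizes. The metric values are invented for illustration, and this is not a description of how Statsig computes power.

```python
from math import sqrt
from statistics import NormalDist


def relative_mde(mean: float, std: float, n_per_group: int,
                 alpha: float = 0.05, power: float = 0.8) -> float:
    """Approximate relative minimum detectable effect for a two-sided
    two-sample z-test with equal group sizes and equal variances."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    standard_error = std * sqrt(2 / n_per_group)
    return (z_alpha + z_power) * standard_error / mean


n = 630_000  # hypothetical users per group, sized for the primary metric

# Hypothetical primary metric: a 20% conversion rate (std of a 0/1 outcome).
primary_mde = relative_mde(mean=0.20, std=sqrt(0.20 * 0.80), n_per_group=n)

# Hypothetical guardrail: a heavy-tailed cost per user (mean 1.0, std 20.0).
guardrail_mde = relative_mde(mean=1.0, std=20.0, n_per_group=n)

print(f"primary MDE:   {primary_mde:.1%}")
print(f"guardrail MDE: {guardrail_mde:.1%}")
```

With these made-up inputs, the same sample size that detects about a 1% change in the primary metric can only detect about a 10% change in the guardrail.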

Defining meaningful thresholds for guardrails

Related to ensuring guardrails are well-powered: when you set a guardrail metric, have some prior idea of “how bad is bad enough” to be concerned.

For example, if your A/B product launch is accompanied by a 1% slowdown in page load times, is this concerning enough for you to roll back your changes? Do the benefits of the launch outweigh the slowdown, or is this 1% a huge concern?

You’ll have to lean on knowledge of your particular business and product to make these decisions for each launch.
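One way to make “how bad is bad enough” explicit is to write the threshold down before launch and compare the guardrail’s confidence interval against it. The sketch below is a hypothetical helper, not a Statsig feature. It assumes a metric where increases are bad (such as page load time) and takes the confidence interval of the relative change as input.

```python
def guardrail_verdict(ci_low: float, ci_high: float,
                      max_tolerated_increase: float) -> str:
    """Classify a guardrail where increases are bad.

    `ci_low` and `ci_high` bound the relative change (0.01 means +1%).
    `max_tolerated_increase` is the threshold agreed on before launch.
    """
    if ci_low > max_tolerated_increase:
        # Even the optimistic end of the interval is worse than tolerated.
        return "breach"
    if ci_high <= max_tolerated_increase:
        # Even the pessimistic end of the interval is acceptable.
        return "within tolerance"
    # The interval straddles the threshold: the test cannot tell.
    return "inconclusive"


# Hypothetical readouts against a 5% tolerated slowdown.
print(guardrail_verdict(0.002, 0.018, 0.05))   # small slowdown, tight interval
print(guardrail_verdict(0.06, 0.09, 0.05))     # clearly past the threshold
print(guardrail_verdict(-0.03, 0.12, 0.05))    # too noisy to decide
```

The “inconclusive” case is the underpowered-guardrail situation described earlier: the interval is too wide to say whether the threshold was crossed.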

Implementing and monitoring guardrail metrics on Statsig

Setting up guardrail metrics in Statsig is straightforward: they are just normal metrics! Add your chosen guardrail metrics to the “Secondary Metrics” section in your experiment setup.

Once you’ve done this and launched your experiment, there are a few ways you can monitor.

Option 1: Bug detection

The classic A/B test is designed to only be checked and analyzed once. Under this design, you shouldn’t be tempted to end experiments early, and it’s often best practice to avoid “peeking” at your metrics in general. This helps you avoid the temptation to declare your product a success due to early, noisy data.

However, an exception can generally be made for checking guardrail metrics and checking for obvious bugs. You might want to check on your metrics to ensure they haven’t tanked. This can help identify issues sooner that entirely break an experience or user flow. Outright-breaking changes should be easy to see if you’ve chosen metrics that monitor core product success, as the deltas should be large and obvious.

💡 Pro tip: Use Statsig’s Alerts++ feature to automate this monitoring for you. Set reasonable thresholds that would represent obvious regressions that should stop the test. With these enabled, you can avoid the temptation to look at your other metrics and make early decisions.

Beyond checking for bugs, however, try to avoid even looking at primary metrics. Ignore any promising results you see, trust your design, and let the experiment run through its design duration.
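If you want to see why peeking is risky, a small simulation helps. The sketch below runs many A/A tests (no real difference) and compares two decision rules: declare a win if the z-score crosses 1.96 at any of 14 looks, versus only at the final look. The setup is simplified (equal-sized batches, known variance, a fixed seed) and the function name is ours. It is meant to build intuition and is not how Statsig analyzes experiments.

```python
import random
from math import sqrt


def false_positive_rates(num_looks: int = 14, num_sims: int = 5000,
                         z_crit: float = 1.96, seed: int = 0) -> tuple[float, float]:
    """Simulate A/A tests and return (rate if you peek at every look,
    rate if you only check the final look)."""
    rng = random.Random(seed)
    any_look_hits = 0
    final_look_hits = 0
    for _ in range(num_sims):
        running_sum = 0.0
        crossed_at_any_look = False
        z = 0.0
        for look in range(1, num_looks + 1):
            # Each batch adds one standard-normal increment under the null.
            running_sum += rng.gauss(0.0, 1.0)
            z = running_sum / sqrt(look)
            if abs(z) > z_crit:
                crossed_at_any_look = True
        any_look_hits += crossed_at_any_look
        final_look_hits += abs(z) > z_crit
    return any_look_hits / num_sims, final_look_hits / num_sims


peeking_rate, final_rate = false_positive_rates()
print(f"false positive rate when peeking at every look: {peeking_rate:.1%}")
print(f"false positive rate when checking once at the end: {final_rate:.1%}")
```

The final-look rate should land near the nominal 5%, while the peek-at-every-look rate comes out several times higher.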

Option 2: Continuous monitoring

An alternative A/B test plan is to use Sequential Testing to allow continuous monitoring. If you’ve planned for sequential testing, you can look at results as much as you want and still avoid increasing the chances of false discovery.

By keeping a close eye on these metrics, you ensure that any significant changes do not go unnoticed. This proactive approach helps maintain the integrity of your product experience and business health. Regularly check the dashboard and adjust your strategies as needed.
